AI is Pollution

The destruction of the internet, and the end of AI.

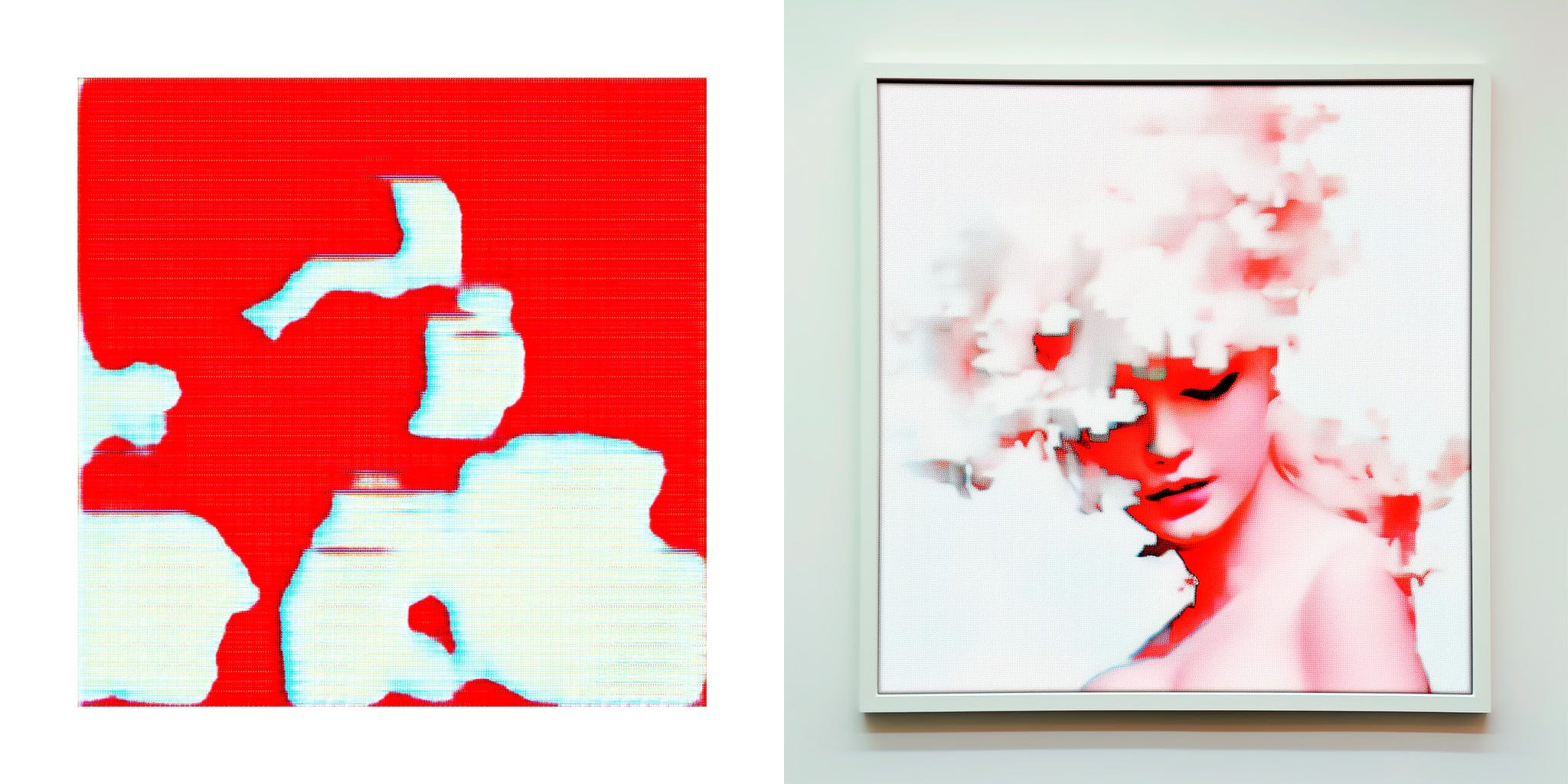

I recently uploaded an image of one of my "Tabula Rasa" works into Midjourney – a popular generative AI tool. I asked Midjourney to create an image showing that "Tabula Rasa" work "framed and hanging on a wall." The result was, to say the least, surprising.

The original "Tabula Rasa" work that I uploaded was an image created by training an AI/GAN on just two solid colors – red and white. The project, which I've written about previously, was intended to explore the limits on how little an AI could be trained on, and what that might reveal about its inner state, or emotions. The "Tabula Rasa" results were abstract images that highlighted the strange mix of organic and machine behind AI. But when Midjourney placed the image, framed and on a wall, it, for some strange reason, added a woman's face.

One of the themes that has run through all of my work with Artificial Intelligence is the question of what the AI "sees." Does it see the world as we do? Or does it take the inputs (i.e., images) that we feed it and interpret, or understand, them in some way that is completely foreign to us? When it generates new images based on what we have shown it, are we misinterpreting its output? Is it creating something obvious to itself, but invisible to us?

I've searched for that invisible knowledge in my "Manipulations" series. Those works begin with photographs that I've taken. I then use those images to train the machine which, in turn, generates new images. Finally, I take the generated images and manipulate them in search of hidden patterns.

But I've long wanted to create a series in which I skip my own photographs and instead start by training the machine on images that it generated in previous projects of mine. Would those hidden patterns be obvious to the machine? And would the resulting newly generated images reveal this machine-specific knowledge? Or would it all remain hidden, always and only visible to the unique eye of the AI?

My Midjourney experiment differed from that proposed series, because I didn't train the machine. Instead I used Midjourney's pre-trained model. Large pre-trained generative models are the foundation of the current crop of AI tools that have captured the public imagination, including ChatGPT, which is built on a large language model (LLM), and image generators such as DALL-E and Midjourney. These models can perform their seeming magic because they have been trained on vast amounts of text and images scraped from the internet.

These tools, however, may soon become victims of their own success. As people use them, their output, AI-generated content, will come to make up a significant percentage of what is on the web. The problem is that this content is full of errors, including the "hallucinations" that plague modern LLMs. In essence, AI-generated content is "polluting" the web. And as the next generation of LLMs is trained on this polluted internet, its outputs will become increasingly inaccurate and garbled. In effect, as AI pollution gets worse, the next generation of AI systems will be worse than the current one. This is known as model collapse. Or, more poetically, the internet is eating its own tail.
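The feedback loop behind model collapse can be illustrated with a toy simulation. The Python sketch below is only an analogy, not how any real AI system is trained: it assumes a "model" that is nothing more than a bell curve (a mean and a spread), and the function name `simulate_collapse` and its parameters are invented for this illustration. Each generation is fitted only to a small sample produced by the generation before it.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Toy analogy: each generation is a Gaussian fitted to its predecessor's output."""
    rng = random.Random(seed)
    # Generation 0 stands in for the original, human-made data (assumed: mean 0, spread 1).
    mean, std = 0.0, 1.0
    history = [std]
    for _ in range(generations):
        # The current "model" generates content...
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # ...and the next "model" is fitted to that generated content alone.
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        history.append(std)
    return history


history = simulate_collapse()
print(f"spread at generation 0: {history[0]:.3f}")
print(f"spread at generation {len(history) - 1}: {history[-1]:.3e}")
```

In this sketch the spread tends to shrink toward zero as the generations pass: each model inherits only what its predecessor happened to produce, so the variety in the original data is gradually lost.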

"The average human is connected to the internet for 397 minutes each day," writes Jack Self in the Summer 2023 issue of Real Review. Given the internet's algorithmic nature, everything that it feeds us is intended to "sell you something, or sell you to something." Our living consciousness has been shaped by the corporations controlling the internet, "obsessed by the elimination of chance." We now live in a "mirrored reality" where our online lives feel more real than our offline ones. And, as AI-generated content pollutes the web, the mirrors only multiply, our disconnect becoming greater and greater.

Which brings me back to my Midjourney experiment. It was an instance of using AI to learn from, or at least interpret, AI-generated content. And the results revealed not just an erroneous understanding of the original data, but a kind of meta-hallucination, a bias towards realism and photography contained within Midjourney's model.

Will AI-generated content change the way in which we see the world? Will we soon start to see faces in abstract images? Will Midjourney-style images become our basis for interpreting and understanding our everyday experiences?

Years ago, when my husband and I went on an African safari, one of the first things we said to each other was how much the experience felt like a Disney film. Today I see people in conversations with friends, acting as though they were being filmed for Instagram. The media we are exposed to, and the mediums through which we consume them, have always shaped how we live. The internet has only accelerated and intensified the way in which we are influenced.

On one hand, it's fascinating to hypothesize that the new era of AI may be short-lived, as it pollutes the internet and becomes less reliable: a hyped technology that will soon be eclipsed by whatever is next championed as progress. On the other hand, it's terrifying to imagine that we may become increasingly unaware of the AI-generated errors. In that case we become increasingly influenced and shaped, our consciousness shifted, until the hallucination-filled internet becomes our reality.