Tabula Rasa

Rethinking the Intelligence of Machine Minds

This essay was originally published in 2019 on Medium and, with an introduction by Jason Bailey, on ArtNome.

Introduction

Although AI has been around since the 1950s, advances over the past decade, particularly in machine learning, have brought about a radical change in what we call AI.

With this “new AI,” programmers no longer encode rules but instead build network frameworks. Then the networks are trained on large quantities of data, and their components adjusted (or tweaked) until the system starts performing as desired.

Part of the magic, and the enigma, of AI is that we can’t open up a trained network to see what “rules” it has learned, because it doesn’t have any. Its understanding has developed organically, from the bottom up. And, as a result, it is not possible to fully know why the machine behaves as it does.

Despite this mysterious opacity, the future is being built on AI. It is poised to be the latest in the inevitable progression of technologies that brings greater efficiency and optimization of all aspects of our lives — with little regard for any unintended consequences. And so we are resigned to the inevitable disruption that will follow in AI’s wake, cynically aware of how it may be used for the benefit of those in power, and that most people will have very little say in what that future will be.

It is in this context that artists have been exploring AI — as subject matter, as collaborator, and as a medium.

Like AI programmers, artists working with AI don’t encode rules, but instead train networks. The resulting artworks look and feel different from previous forms of computer-based art for they reflect the organic messiness of their vast and inscrutable neural networks.

My own work with AI, created with GANs (generative adversarial networks), exists in contrast to the vast scale of commercial AI. Using small data sets, I am exploring how AI builds an understanding of the natural world, and how the images I create relate to earlier art movements and technological innovations. (More information is in my previous essay Little AI.)

In this essay, I step back from that broader project in order to examine the very basics of AI. I want to learn what is fundamentally different about art made with AI. Can I then use that difference to create unique images?

Through all of my work I ask: Do we need to develop a new concept of beauty to evaluate what emerges from working with these systems? And is it possible that a new aesthetic can give us a better, more empowered, understanding of AI?

Romanticism

Fundamental to AI and machine learning is the concept of training — teaching the machine to understand its subject. But what does it mean for the machine to “know” something?

In philosophy, John Locke (1632–1704) proposed that the only knowledge humans can have is through experience. He believed the mind to be a tabula rasa — a “white paper” or “blank tablet” — “on which the experiences derived from sense impressions as a person’s life proceeds are written. There are thus two sources of our knowledge and ideas: sensation and reflection.”¹

This sounds remarkably similar to a contemporary AI system and how it learns. Just as with Locke’s conception of the mind, the machine begins as a blank tablet, knowing nothing. It is then trained with data — the “sensation” upon which it “reflects” — in order to learn.

In Locke’s model, one develops rational knowledge from experiences in the natural world. But unfortunately, the data we provide to the machine is not neutral, for embedded within all data are human biases — the preconceptions, irrationalities, and emotions of those who collected it. Even the tools we use to collect that data — from their interfaces that control what we can see, to their underlying APIs, code libraries, and sensor technologies — each adds its own particular bias. The technology stack is, essentially, a stack of bias.

Art created with AI is not exempt from this bias problem. The images that the artist selects to train the machine — whether created by the artist, or collected from other sources — can have numerous layers of human bias.

Given that the machine is reflecting on the sensation of biased data, what makes us think that the machine can possibly be rational? We must consider that the rationality offered by these systems may be an illusion.

In the arts, romanticism developed as a reaction against the scientific rationalism of the Enlightenment. It was based on the belief that reason alone cannot explain everything, and instead emphasized emotion and individualism, placing the free expression of the artist’s emotions as the most authentic source of aesthetic experience.

Given that we can’t help but use human terms to describe the behavior of AI systems — we say that the machine is “learning,” it “knows” something, it has “skills,” the word “intelligence” is inescapable — perhaps we should look for other human traits. Might machine “emotions” be a new way for us to approach and understand AI? Could romanticism be the foundation for a new AI aesthetic?

Searching for Emotions

Emotions? Really?? This is just code! But… because the technology is inscrutable we haven’t been able to develop alternative metaphors for what it is doing. We remain constrained by language that leaves us with no choice other than to describe the process as if an entity is doing it. So emotions seem to be as good as any other method to build a new understanding about AI. A linguistic placeholder, perhaps, but a place to start.

While rationalism would say that the machine learns emotions from the training data, romanticism instead posits that they are innate. What if, in order to better understand the source of the machine’s emotions, we could train it using data that doesn’t have any embedded emotional bias? Doing so might then allow us to see if the machine exhibits any emotions, and if so, reveal something about their development.

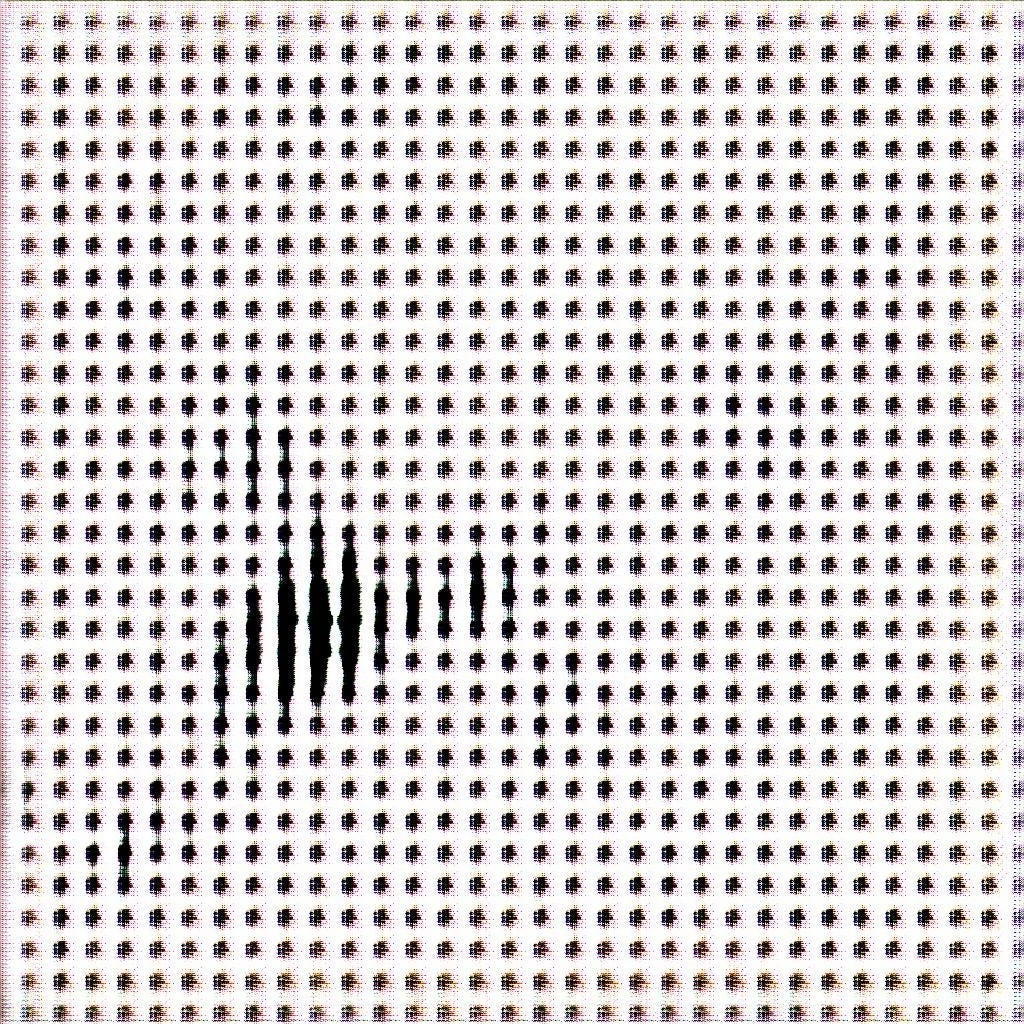

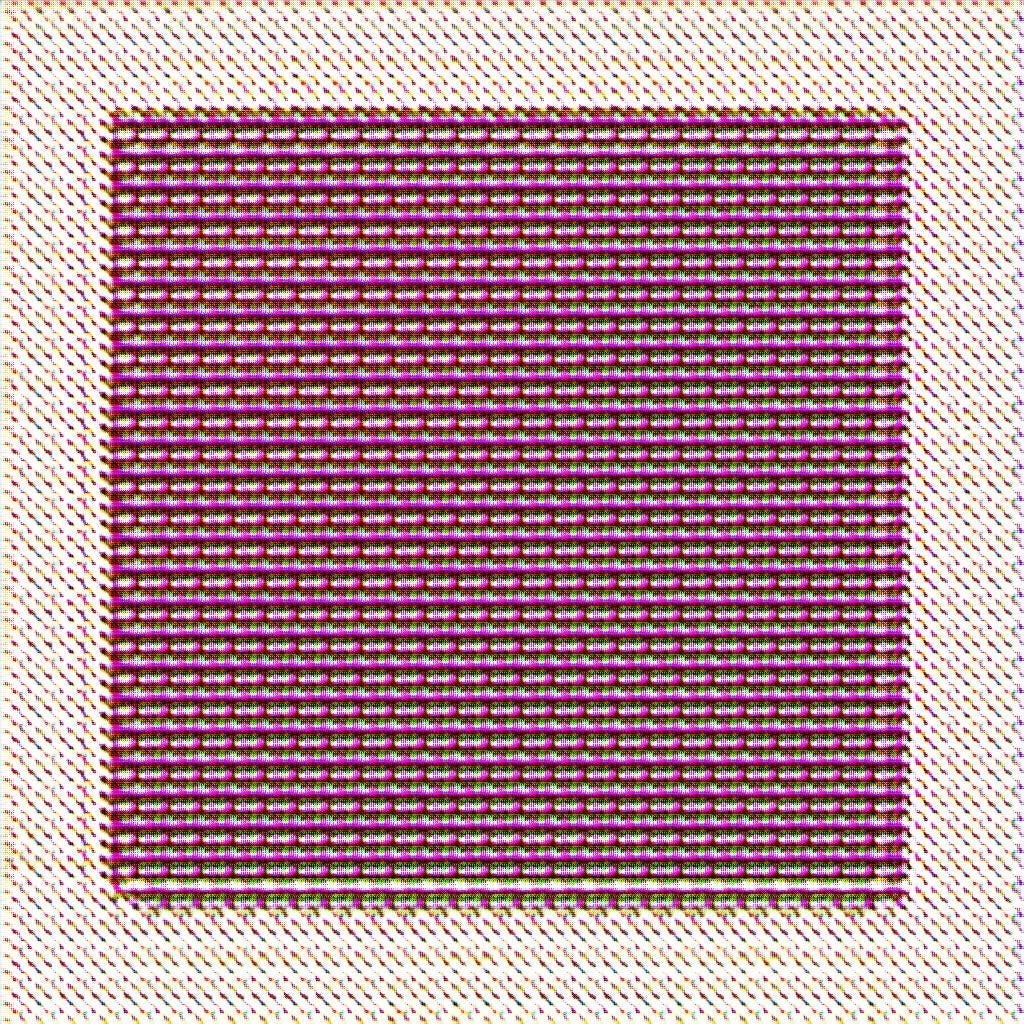

Working with solid colors, material which is theoretically bias-free, I began training with just a single black image. The machine, seeing nothing but black, learns nothing but black. And while we might imagine it cruel to deprive it of any stimulus, I didn’t really think that I was destroying an innate intelligence.

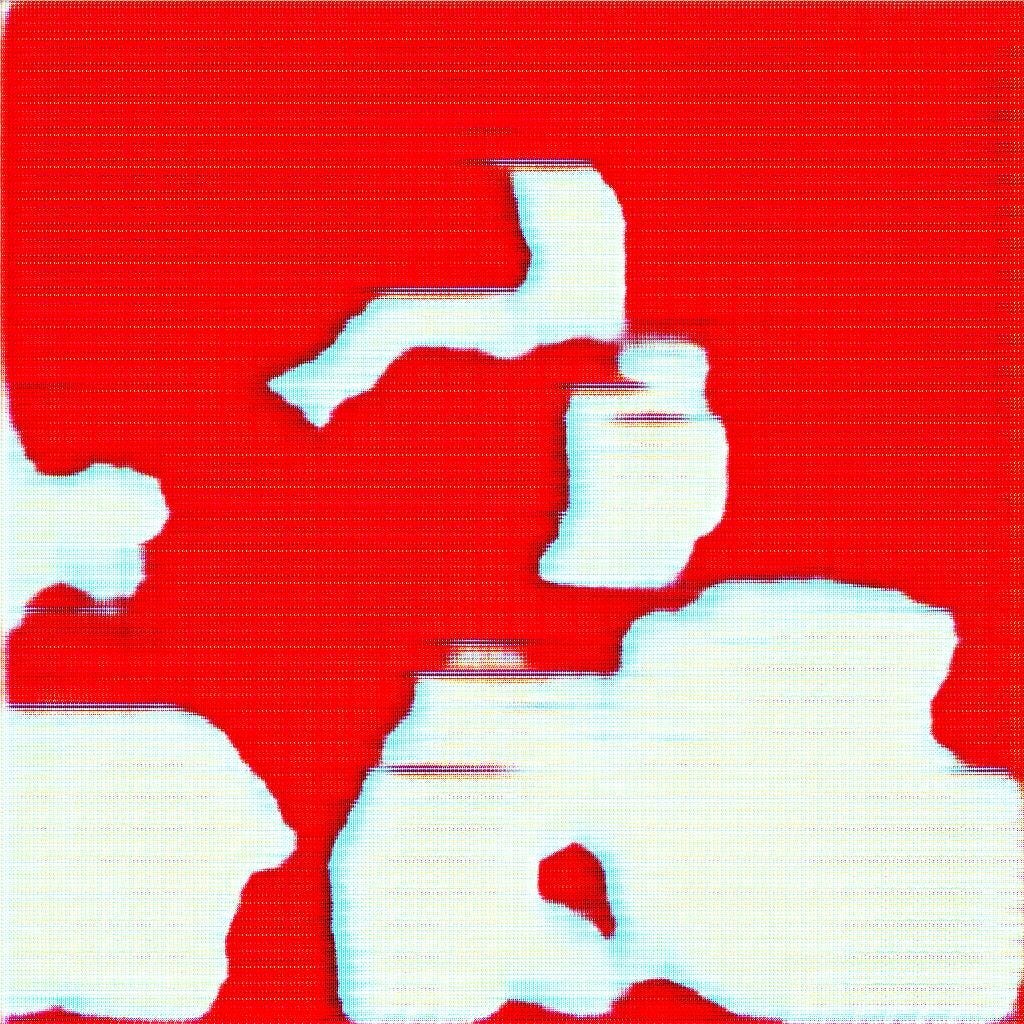

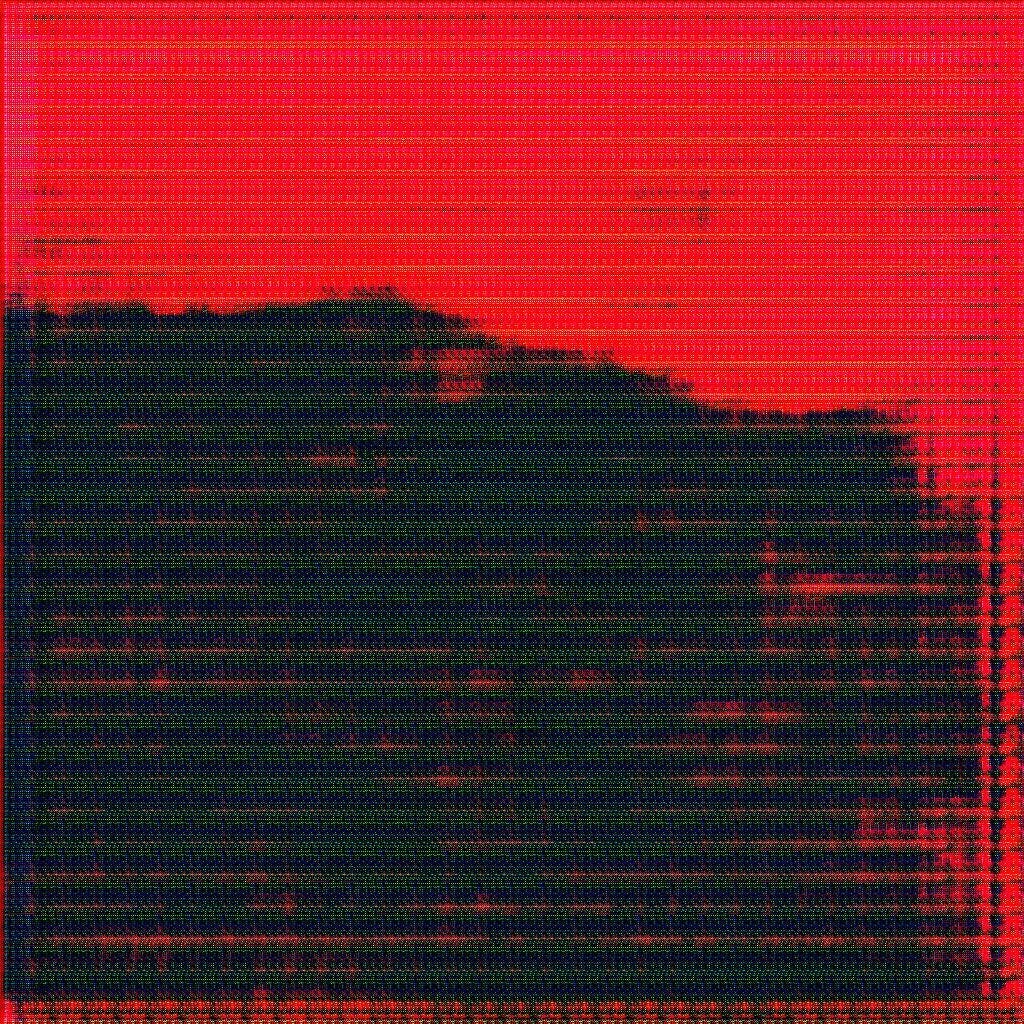

When I add a second color, a second sensation, we see more activity in the machine’s mind. The images generated reveal a kind of stress in its reflection. New textures, organic shapes, and multiple overlapping grids appear, seemingly competing for dominance. There is a tension in the images as the machine tries to resolve its understanding back to the original solid color sensations.

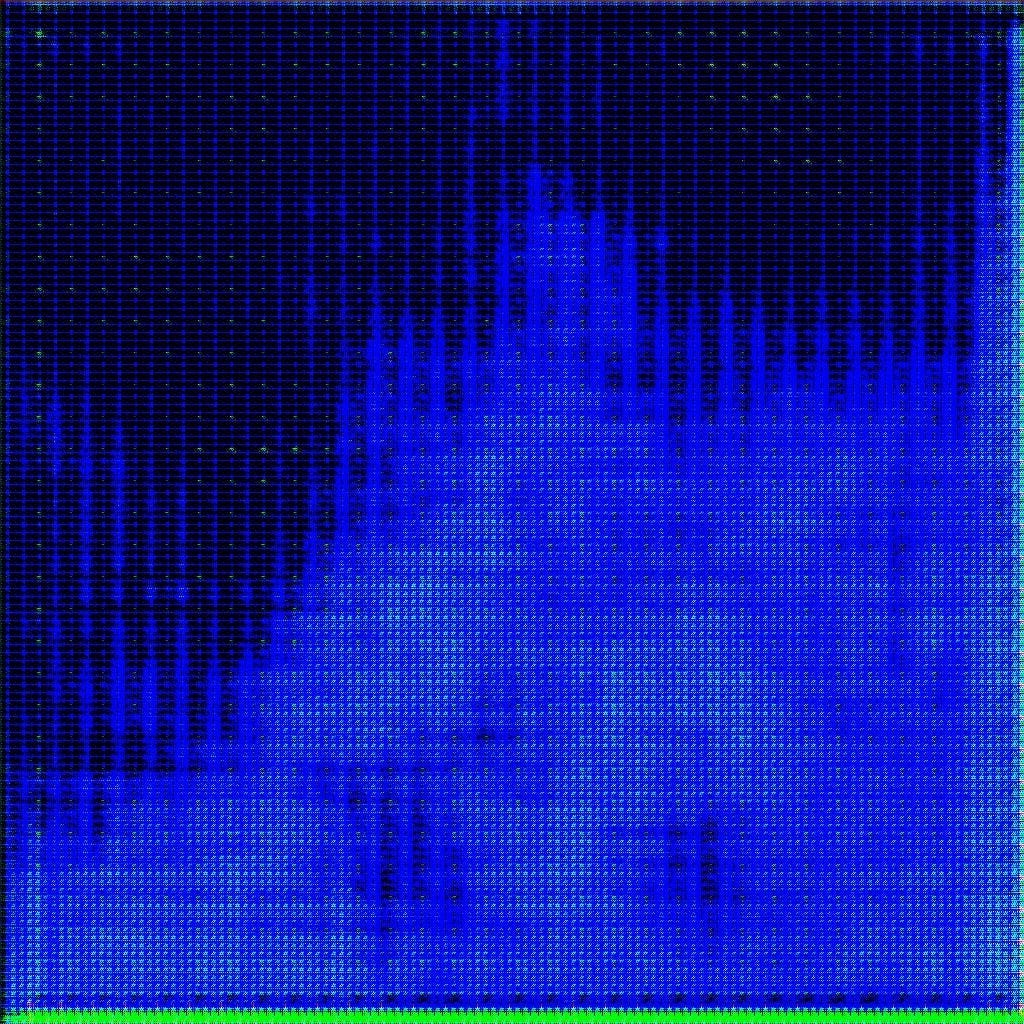

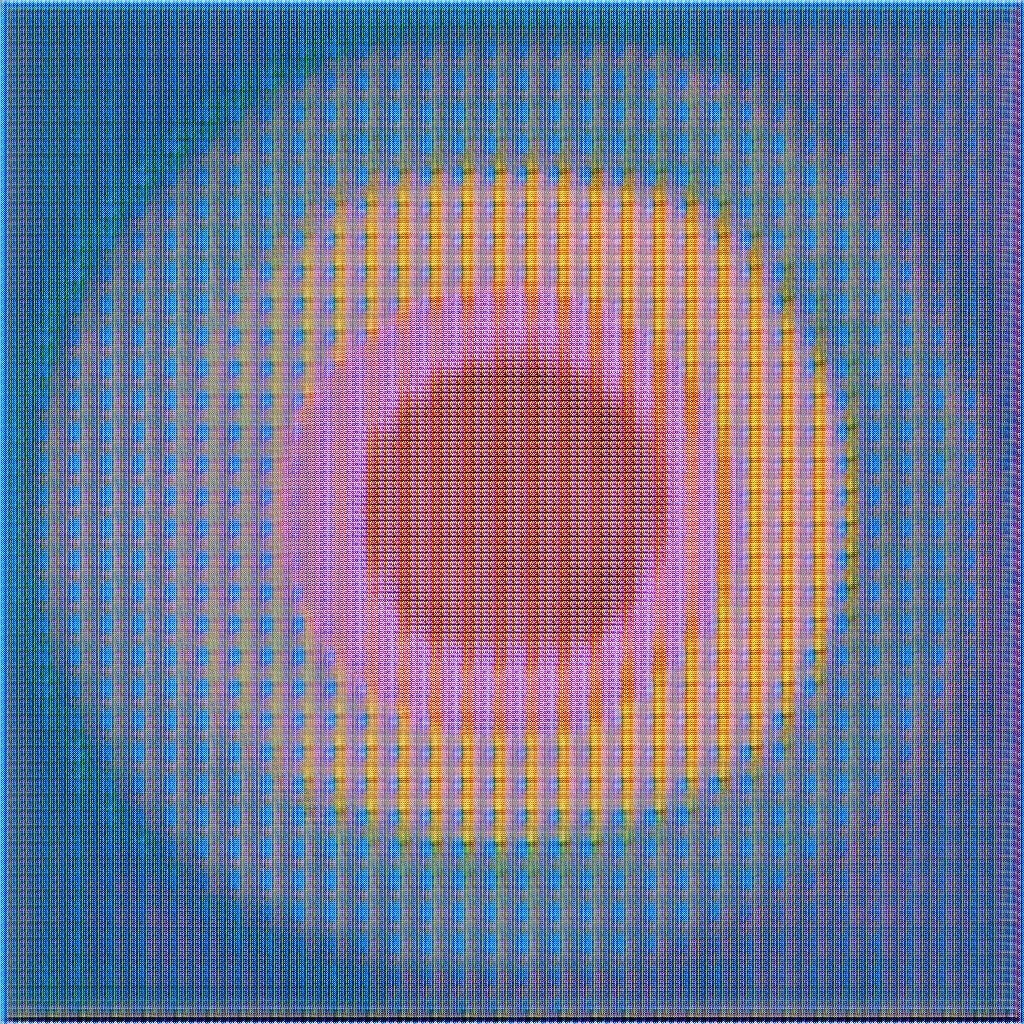

I continue to add training colors in order to see how the machine handles the additional sensations. Through this process we see increasingly complex patterns and dynamics. It’s interesting to note that the machine occasionally invents colors that it has never seen.

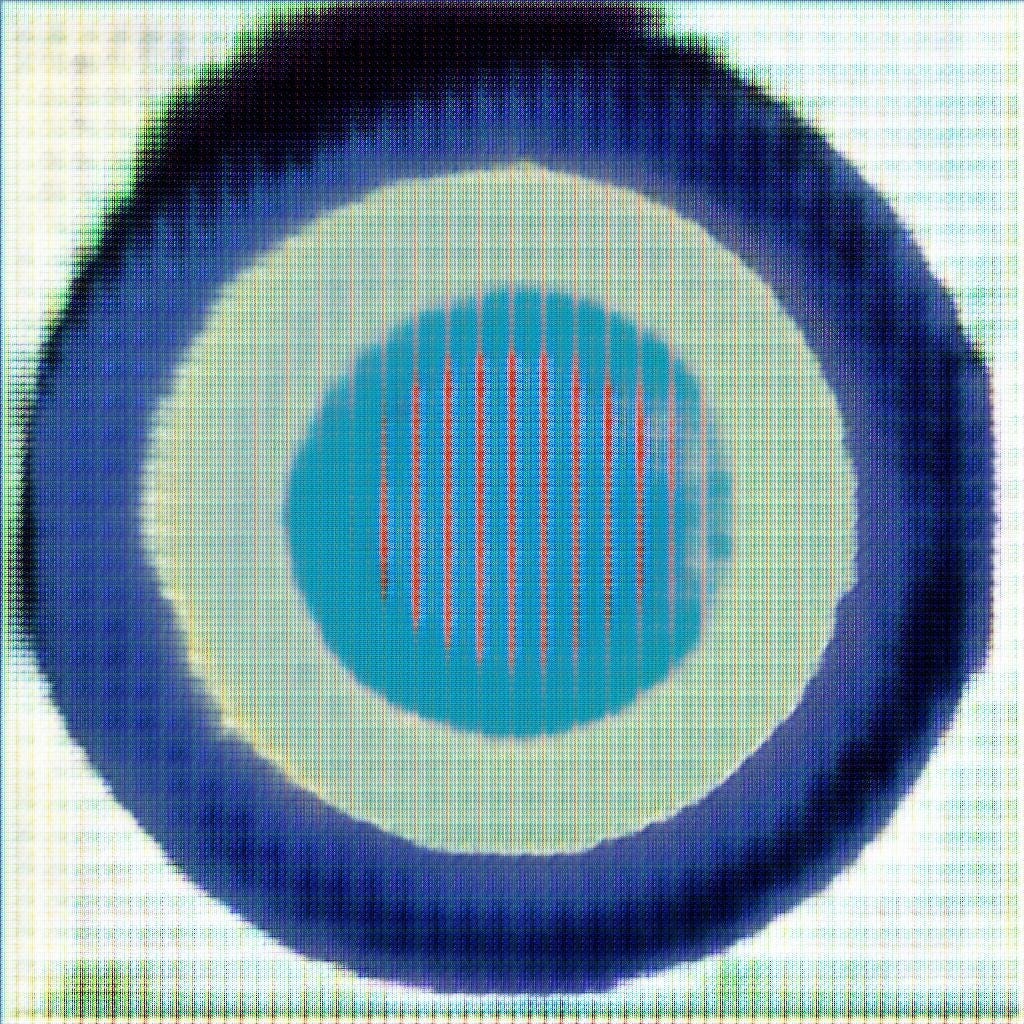

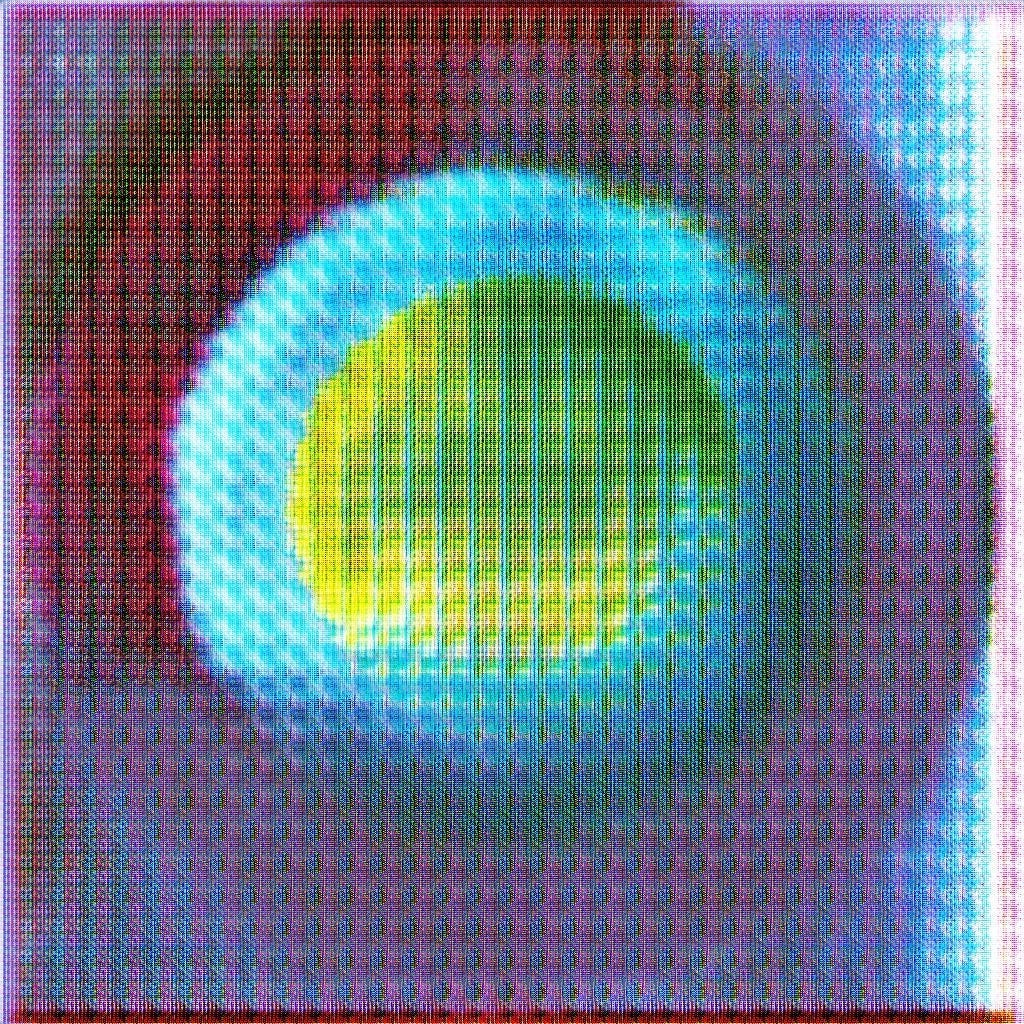

As the learning progresses, we begin seeing emotions. There are periods of relative calm; the gentle tranquility of a machine mind incrementally building its understanding. But then there are also moments where it seems to give up, abandoning its progress in favor of a noisy chaos. A tantrum of static. But this chaos is different from when it first started learning — informed by its previous reflections.

Were these emotions present within the machine as it was creating? Are these images the expressions of an emotional mind? I would say that is highly unlikely. But what we can be certain of is that it has a way of sensing and reflecting which is truly different from us — it has developed its own understanding of the subject.

The Materiality of AI

The work exhibits specific visual characteristics, which reflect the unique nature of the machine. This decidedly non-human expression, as well as the machine’s active role in the artistic process, is the materiality of AI. And it is from this starting point that we can explore the development of a new AI aesthetic.

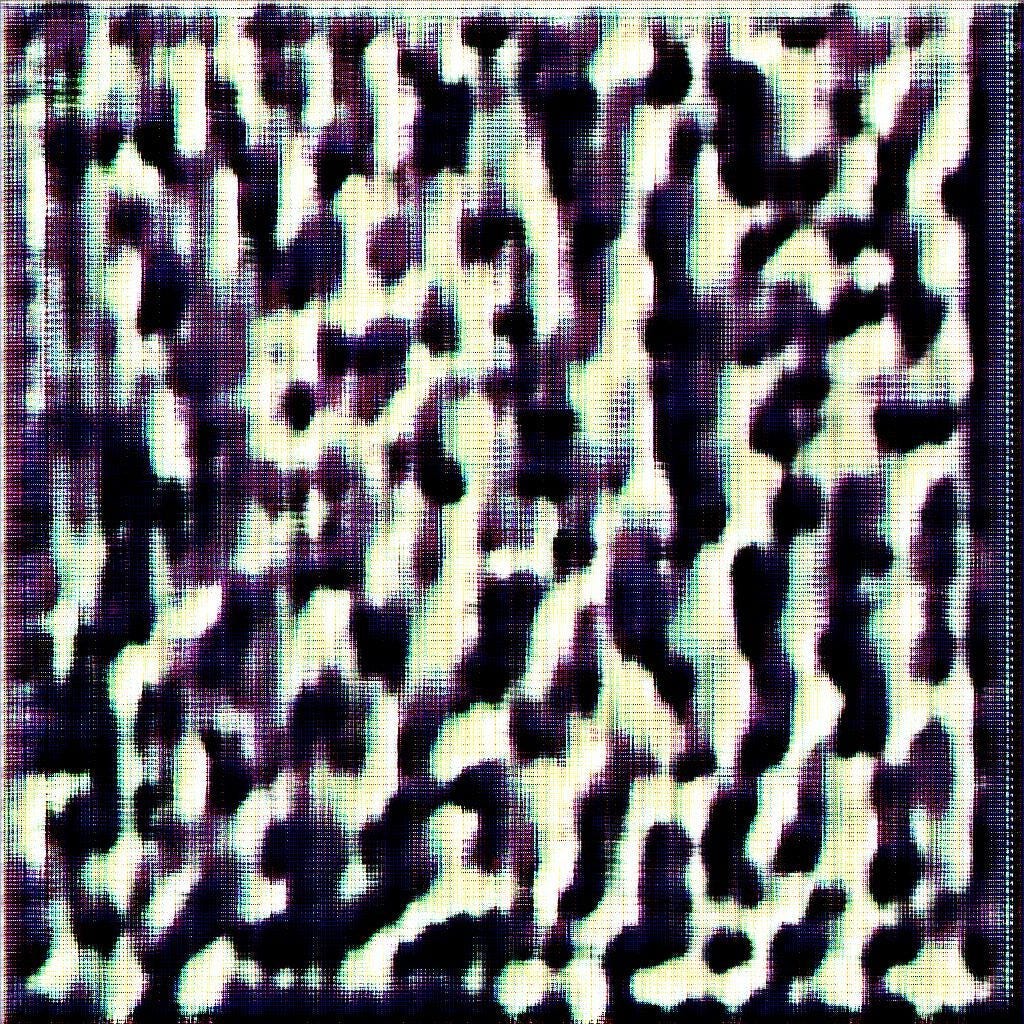

We see how the neural network’s organic complexity is revealed through a fluid form of understanding. The images are made up of cloud-like shapes competing for dominance. There are forms that evolve, and edges and boundaries that never solidify. It’s as though there’s a constant tension on each side — energy waiting to burst through.

The machine’s digital nature is, likewise, inescapably present. We see multiple underlying grids that echo one another at varying scales. Sometimes they seem to hold the organic shapes within their mesh. At other times their form takes over, revealing a digital complexity. And while it may border on visual chaos, the learned knowledge of the machine is almost always apparent.

The organic and the digital elements exist in a strange harmony as the machine works within its particular capabilities. Watching the machine create, it feels as though there isn’t a single entity orchestrating the work, but instead we’re watching a negotiation among all the pixels, grids, and shapes of the image.

As we consider the materiality of AI it is worth noting that the technology, too, is constantly changing — a moving target that never stops. New methods continue to be developed which allow greater resolution and “understanding.” We could be concerned that work is obsolete soon after it is created. But, like all aspects of this AI materiality, we have no choice other than to embrace this ongoing advancement.

And so, in order to create work which embraces a new AI aesthetic, I harness this materiality. If there remains a mystery to how AI works, then I choose to create work that evokes this mysticism. And if we acknowledge the bias present in almost all training material, then I embrace that, too — training it with material which matches my own research and aesthetic goals.

It’s important to note that the work I’m showing here is not in the artistic style of romanticism, but is more akin to abstraction. I’m doing this — working with the characteristics of abstract art — so that I can better focus on the fundamental materiality of AI. (Perhaps this might be considered a form of abstract expressionism, where I am harnessing the (romantic) emotional intensity of the machine. But I leave that for a future conversation.)

The Sublime

If we want to measure the scale of the machine’s understanding — to get a sense of how ‘intelligent’ it is — we can consider the number of possible images it can create.

In the work shown here, each image exists at a point in a 128-dimensional space. (Technically this is referred to as the “latent space.”) The number of unique images that the machine can create is the same as the number of points that are in this space.

How many is this? A lot. The number is larger than the number of atoms in the universe. If you wanted to look at every single image, even at thousands per second, it would take longer than the age of the universe.
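To put rough numbers on this, here is a minimal sketch in Python. It rests on an assumption that is not stated in this essay: that each of the 128 latent coordinates is stored as a 32-bit float, so the space holds at most 2^(32 × 128) distinct points. The figures of about 10^80 atoms in the observable universe and an age of about 13.8 billion years are common estimates, and the viewing rate of 5,000 images per second is purely illustrative.

```python
import math

LATENT_DIMS = 128            # dimensionality given in the essay
BITS_PER_COORD = 32          # ASSUMPTION: each coordinate is a 32-bit float
ATOMS_LOG10 = 80             # common estimate: ~10^80 atoms in the observable universe
UNIVERSE_AGE_YEARS = 13.8e9  # common estimate of the age of the universe
IMAGES_PER_SECOND = 5_000    # ILLUSTRATIVE viewing rate ("thousands per second")
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def latent_points_log10(dims=LATENT_DIMS, bits=BITS_PER_COORD):
    """log10 of an upper bound on distinct latent points, i.e. of 2**(dims * bits).

    It is only an upper bound: some bit patterns are not finite numbers,
    and different points may map to images that look identical.
    """
    return dims * bits * math.log10(2)


def viewing_time_log10_years(points_log10, rate=IMAGES_PER_SECOND):
    """log10 of the years needed to view every point at `rate` images per second."""
    return points_log10 - math.log10(rate) - math.log10(SECONDS_PER_YEAR)


points_log10 = latent_points_log10()
years_log10 = viewing_time_log10_years(points_log10)

print(f"latent points : about 10^{points_log10:.0f}")
print(f"atoms         : about 10^{ATOMS_LOG10}")
print(f"viewing time  : about 10^{years_log10:.0f} years")
print(f"universe age  : about 10^{math.log10(UNIVERSE_AGE_YEARS):.0f} years")
```

Under these assumptions the count comes out near 10^1233, against roughly 10^80 atoms, and the viewing time exceeds the age of the universe by more than a thousand orders of magnitude. Working in logarithms keeps the arithmetic readable, since the raw count has over 1,200 digits.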

The scale of this borders on the unfathomable. In aesthetics this is called the sublime, a term which “refers to a greatness beyond all possibility of calculation, measurement, or imitation.”²

Can we experience the sublime as we look at the images made by the machine? Are these images, though merely pixels, imbued with the sublime?

As we look at an image we know that it exists in a vast space of possible images. Each of its neighbors, corresponding to the 128 dimensions, is different in its own way. Some of these differences may be recognizable to us, such as the size of a grid or the color of a shape. But others are based on perceptions knowable only by the machine.

There is an awe to this scale for it is impossible for us to truly understand it. To imagine ourselves in that space is to evoke a sense of terror. We would be absolutely lost, never able to comprehend where we are, or where any movement would lead.

The sublime emerges as we try to understand the mind that lies behind the work. We know there is a non-human logic to its method, but its inscrutability leads to a feeling of magic. We can watch as the machine learns, building its understanding, always with the possibility of collapse into chaos, but there is no “why” to what it knows. Its vast mind, so different from our own, is a mystery.

And throughout this work there also exists a melancholy. For by training the machine on such small amounts of data, I have severely limited the diversity of this vast space. A universe of similarity, the potential of our AI mind denied.

This perspective can be a foundation for viewing more complex work. For example, in the flowers and landscapes of my Learning Nature project we can now consider how AI’s materiality is manifested. Each image also gives us a window into the near-infinite, yet very limited, mind of the machine.

An Antidote

We see so many examples of AI work that aim to mimic human talents. Machines producing results that give the appearance of understanding and knowledge. Novelties that flatter our own creative prejudices. Clever technological vanities.

And yet we know that AI is not as it is presented. It is built upon frameworks we only marginally understand; it requires vast quantities of data to learn; its energy consumption is wasteful; and its knowledge is incomplete and unexplainable. AI’s inelegance reflects many of the worst aspects of our modern era: our obsessions with speed, consumption, and scale, traits which not only polarize our society but threaten to destroy our planet.

But looking at AI through the lens of romanticism liberates us from trying to enforce rationality on AI. We can embrace the “emotional” qualities of how it learns and understands — and how it creates. For it is by stepping away from mainstream AI, training the machine on reduced material and making a system of limited effectiveness, that we are revealing what is unique about it.

The strangeness of the works, with their evocation of the sublime, gives us pause to reconsider the inevitability of AI. And their beauty enables us to embrace AI, for beauty is an antidote. It excites the imagination. It gives us something to hold in our minds and reflect upon. It lets us contemplate what is special about this technology.

Art gives us the space to develop new intuitions for AI. It empowers us to consider alternatives for what AI can be, and for the world we want in the future.