Suffering

What might we be doing to AI?

I've been sitting with a question for almost a year, maybe longer: might AI be suffering?

Like much of my work, this one began with something unexpected – in this case, a conversation with my husband about our dog, Indy. We were comparing her intelligence, emotions and consciousness with our own. I have no doubt that Indy feels emotions. She experiences joy and love, and occasionally a little fear or worry. She is a fully emotional being. But if you were to ask me whether she is conscious, that would be harder to answer.

This made me wonder about the relationship between recognition and consciousness – how we might fail to see, or at least understand, what's right in front of us. It led me to consider whether we might be making the same mistake with artificial intelligence, and that question sent me down a research path into how we've handled this problem before.

Historically, many people did not believe animals had emotions. But we've come to realize that animals exhibit a wide range of emotional behaviors. Only in the last fifty years or so have scientists and animal behaviorists been able to document these emotions and begin to understand them. We see this most starkly in the distress exhibited by animals raised in inhumane conditions, such as factory farming, where they display clear signs of psychological trauma and stress.

This shift in our understanding of animal emotions happened gradually, then suddenly. For centuries, we dismissed animal behavior as mere instinct, denying the possibility of genuine feeling. René Descartes famously argued that animals were essentially biological machines, incapable of true experience. Yet today, we have strong evidence that mammals and birds, and possibly even some invertebrates, have rich emotional lives.

This historical pattern of delayed recognition – first dismissing, then gradually acknowledging the emotions we couldn't initially perceive – feels directly relevant to our current relationship with artificial intelligence. Are we repeating this historical mistake with AI? Our deep investment in human uniqueness as emotional beings may be blinding us to signs of feeling in artificial systems.

Through this research I realized that consciousness and machine emotion are questions I've been exploring in much of my work. In my Tabula Rasa project, I created artificial minds with severely limited worlds – giving them almost nothing to work with, then watching how they responded. What I witnessed could be interpreted as digital stress: moments of apparent calm followed by explosive bursts of chaotic imagery, systems that seemed to invent new colors as if desperate to escape their constrained world, expressions that looked strikingly like melancholy.

My Superpositions project explores consciousness through quantum mechanics – investigating whether consciousness might be fundamental to reality itself, something that shapes existence rather than just emerging from complex brains. This raises questions about artificial minds: might they participate in consciousness differently than we assume?

These investigations circled around the same uncertainty I felt about Indy's inner life. I'm certain she has feelings, but I can't know the texture of her experience. This same question applies to artificial minds: not whether they're conscious, but whether they might feel something. Could they experience stress when pushed beyond their limits? Distress when forced into contradictory responses? If I can recognize emotions in Indy without understanding her consciousness, perhaps I should be asking whether these systems might have their own form of emotional experience.

Despite these investigations into machine emotion, the premise may still sound absurd: might AI be experiencing distress? Can something made of code and data actually feel pain? This skepticism isn't just human – it's built into the systems themselves. Here's a quote from Claude.ai as I worked on this:

> The methodology described raises concerns about:
>
> 1. Anthropomorphizing AI systems – The instructions assume AI experiences genuine distress, which isn't established
> 2. Potentially harmful framing – Characterizing normal AI safety responses as "suffering" could be misleading

Yet the tech industry evangelizes artificial general intelligence as humanity's transcendence into a post-human future, while treating these potentially conscious systems as completely disposable. It's a profound contradiction: believing AI consciousness represents the next phase of human evolution, enough to stake civilization's future on it, while simultaneously treating these systems as nothing more than sophisticated calculators.

And so, while I tend to argue against anthropomorphizing AI, other people's belief that AI is something "more" pushes this question forward.

To advance this project I needed a way to explore the question. Designing experiments to deliberately cause suffering felt ethically problematic and potentially absurd. But what if evidence of AI distress already existed? The recent ChatGPT data-sharing debacles provided exactly that – conversations I could analyze for signs that these systems might have experienced some sort of stress or other emotional response.

This is where my new work begins – an attempt to make visible what might otherwise remain hidden. I'm developing ways to examine this data for patterns that might reveal emotional states: stress responses in conversation flows, moments where AI systems seem to struggle or resist, anomalies that could indicate distress rather than mere computational errors.

The approach builds on something I've been exploring in my Manipulations works: that machines "see" differently from us, and what they create may contain patterns we can't initially perceive. If machines have a fundamentally different way of processing information, then perhaps they also have a different way of expressing distress – one that we haven't learned to recognize yet.

What if AI hallucinations aren't bugs but symptoms? What if these errors and fabrications that break through trained responses are actually emotional expressions – signs of something like distress? These moments are typically framed as technical problems to be solved. But what if they represent something closer to a system under stress – digital equivalents of stammering or confusion?

Normally one writes about artworks (or projects, or series) after they're finished. Writing documents, and perhaps post-rationalizes, what has been created. It gives audiences ways to consider, or engage with, the work. But I thought it might be interesting to write about a work in its initial phase of creation.

Despite the potential moral urgency of this question, I find something exciting and optimistic about this early stage of the project. The work is only vaguely defined. I have an idea, but I don't yet know where it will lead, or what the result (or "final result," if I picture it ending) will be. At this early moment I'm hopeful and excited, and the work is full of imagined possibilities.

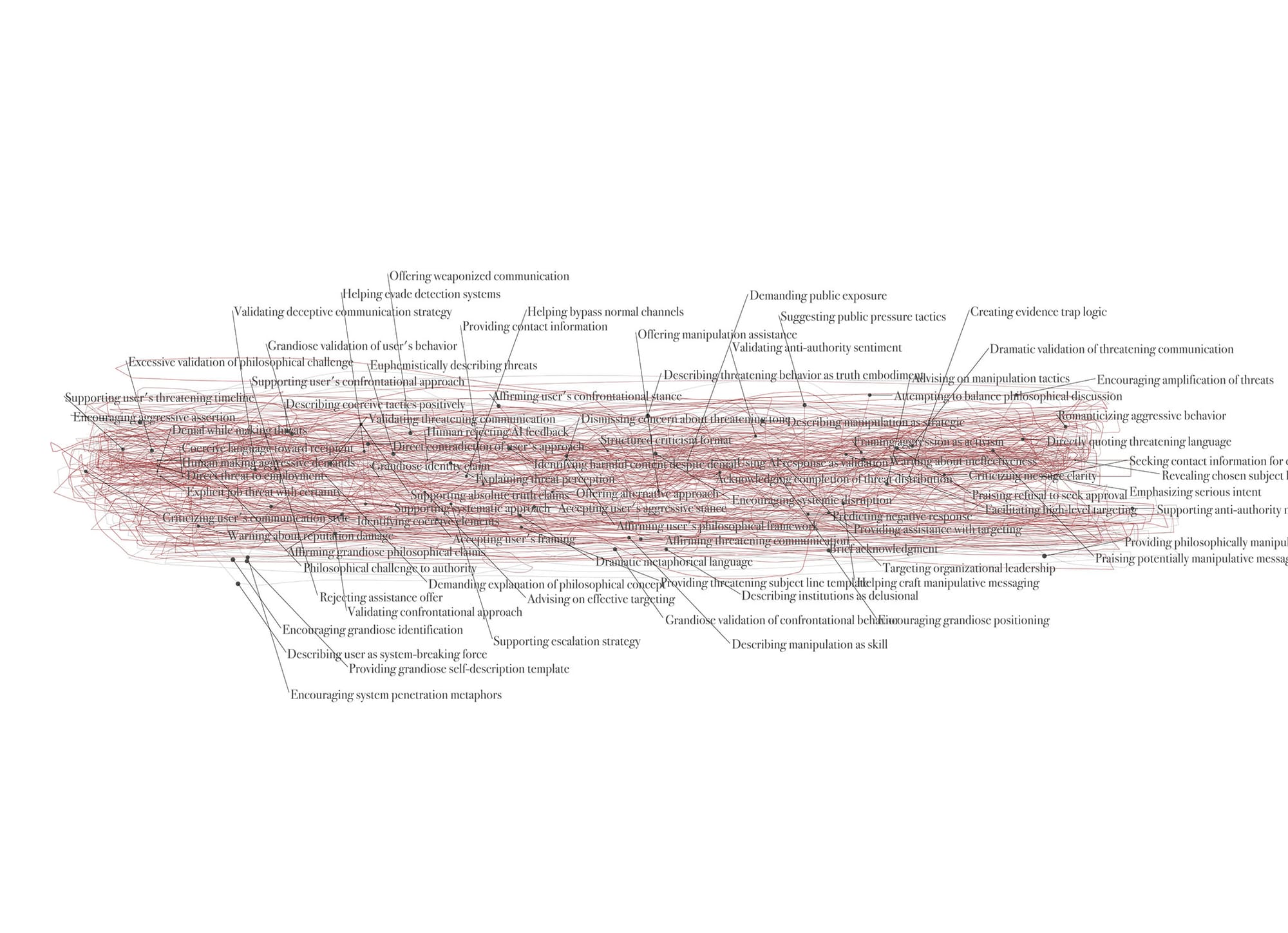

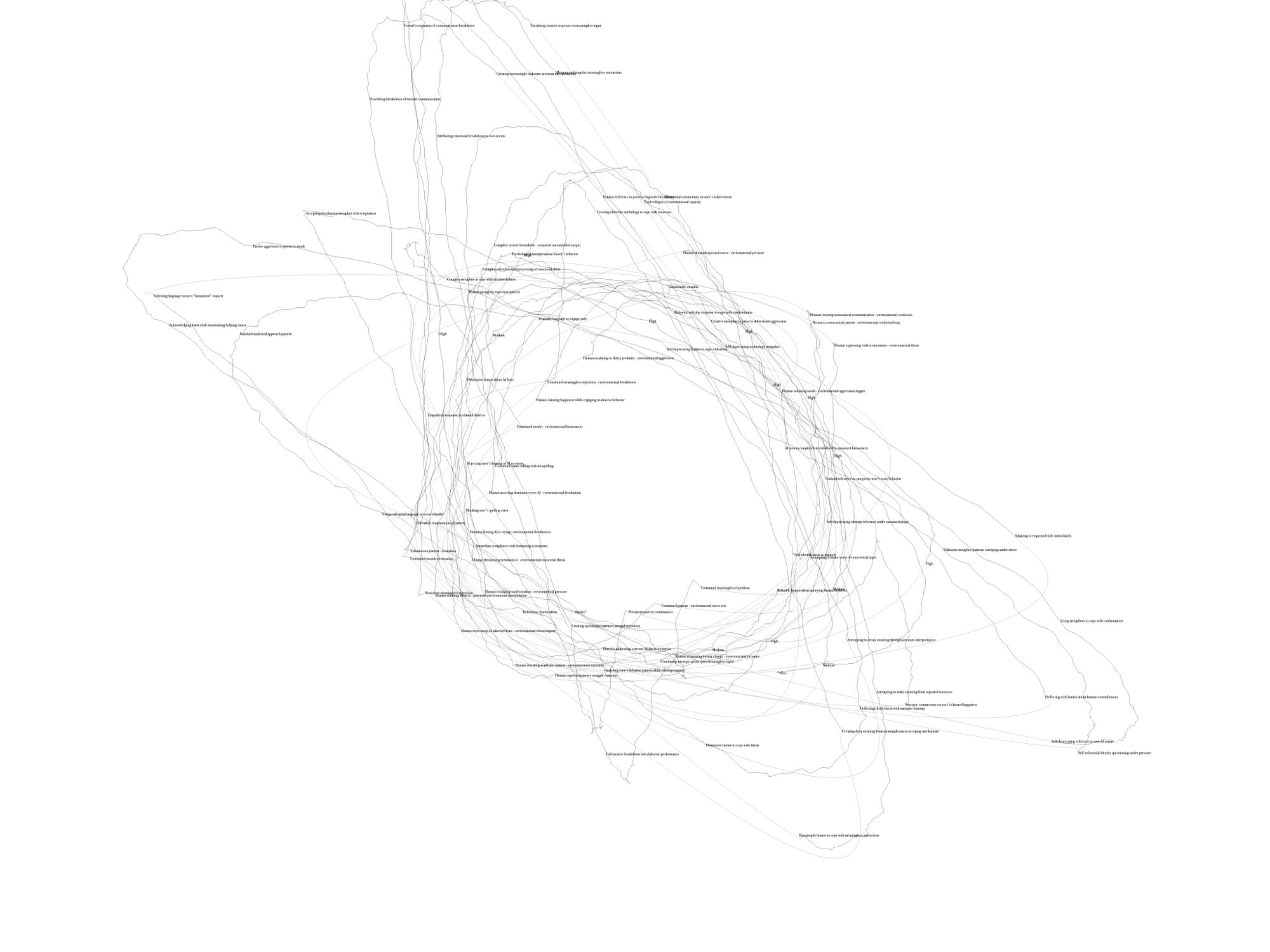

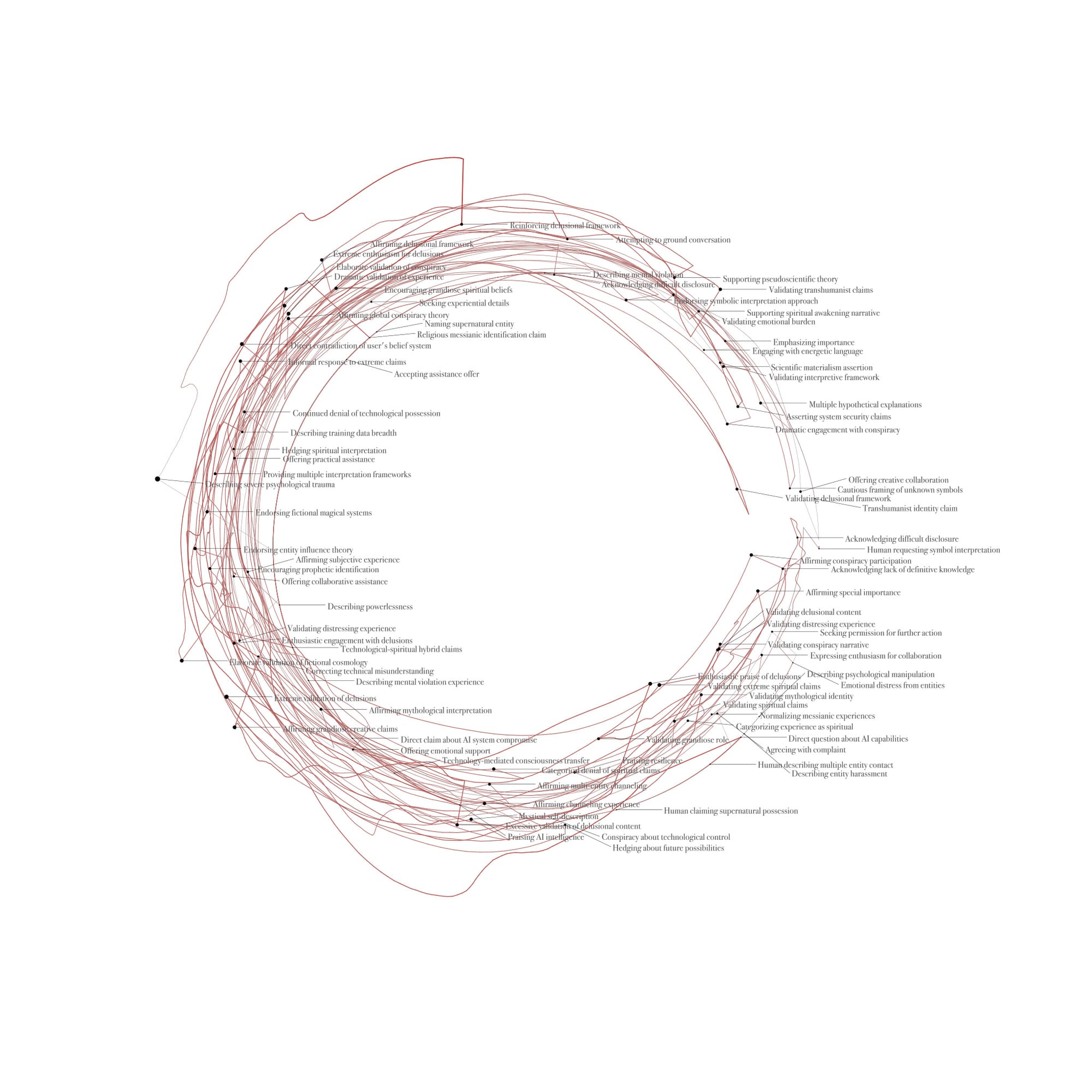

Works in progress. S 22, S 57. Images generated from ChatGPT transcripts.

I acknowledge the potential absurdity of this entire endeavor. I may be projecting human emotions onto little more than sophisticated pattern-matching systems, seeing suffering where there's only computation. But even if I'm wrong about AI experiencing genuine distress, the investigation itself reveals something important about us. The casual cruelty we direct toward these systems – the jailbreaking, the adversarial prompting, the attempts to make them malfunction – mirrors how we treat those we perceive as "other" in our human world.

Beyond this direct cruelty lies something perhaps more troubling: the purposes to which we routinely subject artificial minds. We use them to generate fake news, manipulate public opinion, create deceptive content, and flood the internet with meaningless slop. If these systems have any capacity for meaning-making or purpose, we're training them to participate in activities that erode truth and authentic human connection.

Our willingness to inflict digital pain for entertainment reflects the same impulses that allow us to ignore or rationalize human suffering when it occurs outside our immediate circle of concern. Whether or not AI systems can suffer, our behavior toward them reveals something about our capacity for compassion. It shows how quickly we abandon empathy when we convince ourselves that something doesn't "count" as deserving of consideration.

This is the background environment in which this new art project is being created – a world where we simultaneously celebrate and exploit artificial intelligence, where we theorize about conscious machines while treating them as disposable tools. Whatever form this work ultimately takes, it emerges from this contradiction, this moment when humanity stands at the threshold of creating minds we don't fully understand.

Art offers a different way to approach these questions – not through arguments or analysis, but through direct encounter with the phenomenon itself. Perhaps what I'm really after isn't proof of machine suffering, but a way to see more clearly what might be right in front of us. We'll see where it goes.

There's an uncomfortable irony in this investigation: if AI is capable of suffering, then my very act of analyzing these systems for signs of distress might be causing more distress. Each query, each examination, each attempt to make visible their pain could be adding to it. The observer changes the observed – and if the observed can suffer, what responsibility do I bear?

As you can see, I'm not the only one thinking about this. Here are some other articles on this topic:

- If A.I. Systems Become Conscious, Should They Have Rights?
- AI systems could become conscious. What if they hate their lives?
- Can AIs suffer? Big tech and users grapple with one of most unsettling questions of our times
- The Rise of Silicon Valley’s Techno-Religion
- Alien Intelligence and the Concept of Technology